People of ACM - David B. Papworth

June 13, 2023

What Intel chip preceded the P6, and why was the P6 a significant improvement over the existing state of the art?

The P6 development program started in June 1990 in Hillsboro, Oregon. At that time, Intel’s leading microprocessor was the Intel 80486, and Intel was developing the Pentium processor (which ultimately launched in 1993) in Santa Clara, CA.

The P6 microprocessor implemented a form of data-flow processing which we called “dynamic execution.” The major features were register renaming, out-of-order execution, wide superscalar execution, and deep pipelining. These features allowed the hardware to exploit the parallelism in the underlying applications without regard to artificial constraints imposed by the x86 instruction set. At its launch in November 1995, the P6 was competitive with the best of RISC technology, offered all the cost efficiencies of the PC platform, and could run the vast library of software developed for the x86 platform over the previous decade.
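Register renaming, the first feature named above, is the easiest one to show in miniature. The Python sketch below is a toy model built on our own assumptions: the `Instr` and `Renamer` names, the 16-entry pool of physical registers, and the choice never to recycle registers at retirement are all hypothetical. It is not a description of how the P6 hardware actually implemented renaming. It shows why reusing a register name such as EAX need not serialize otherwise independent instructions.

```python
from dataclasses import dataclass, field


@dataclass
class Instr:
    dest: str    # architectural destination register, e.g. "EAX"
    srcs: tuple  # architectural source registers


@dataclass
class Renamer:
    """Hypothetical toy rename table: maps each architectural register to the
    physical register holding its most recent (speculative) value."""
    num_phys: int = 16
    table: dict = field(default_factory=dict)
    free: list = field(default_factory=list)

    def __post_init__(self):
        self.free = [f"p{i}" for i in range(self.num_phys)]

    def rename(self, instr):
        # Sources look up the current mapping, so true (read-after-write)
        # dependences are preserved. Unmapped registers keep their
        # architectural name, standing for the already-committed value.
        srcs = tuple(self.table.get(r, r) for r in instr.srcs)
        if not self.free:
            # Simplification: registers are never reclaimed in this sketch.
            raise RuntimeError("out of physical registers; a real core would stall")
        # Every write gets a fresh physical register, which removes
        # write-after-read and write-after-write (false) dependences.
        phys = self.free.pop(0)
        self.table[instr.dest] = phys
        return phys, srcs


def rename_program(program, num_phys=16):
    renamer = Renamer(num_phys)
    return [renamer.rename(i) for i in program]


program = [
    Instr("EAX", ("EBX",)),  # 1: EAX <- f(EBX)
    Instr("ECX", ("EAX",)),  # 2: reads EAX written by 1 (true dependence)
    Instr("EAX", ("EDX",)),  # 3: reuses the name EAX (false dependences on 1 and 2)
    Instr("ESI", ("EAX",)),  # 4: reads EAX written by 3
]

if __name__ == "__main__":
    for dest, srcs in rename_program(program):
        print(dest, srcs)
    # p0 ('EBX',)
    # p1 ('p0',)
    # p2 ('EDX',)
    # p3 ('p2',)
```

After renaming, instruction 3 writes `p2` and depends only on `EDX`, so out-of-order hardware could execute it alongside instruction 1 instead of waiting for instructions 1 and 2 to finish with the name EAX.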

How was the P6 developed?

The P6 development program was a huge effort, involving 500+ people over five years. It was the biggest engineering program I ever worked on. We had teams of architects, logic designers, circuit designers, layout designers, validators, and package and test engineers. In those days, it was more common to do transistor-level circuit design and hand-layout of fabrication masks. This helped to increase clock speed and density but came at the cost of increased effort and time. As architects, we had to predict where process technology would be five years in the future and constantly balance correctness, schedule, die size, power and performance so the product could hit that target.

What was the greatest challenge in developing the P6, and how did you overcome it?

The biggest challenge of the P6 was getting such a complex product out the door while minimizing the chances of latent bugs and potential recalls.

The very factors that make “PC compatible” processors commercially attractive also make for very high correctness expectations from the end user. The ability to run lots and lots of software from many different vendors coupled with the low costs of the platform means that the product ramps to high volumes quickly. Serious hardware bugs discovered post-production could mean ruinously expensive recalls.

A semiconductor vendor like Intel sells microprocessors. Others integrate them into platforms, and still others provide software. The end user experiences the combination of processor, platform, operating system, and software all working together. Contrast Intel’s situation with that of a platform vendor like Apple or Google. A platform vendor has direct control over more ingredients and can potentially make changes in the platform, operating system, or software to overcome any problems in the silicon. End users of Intel processors generally expect Intel to “make right” any problems encountered on the microprocessor, and fixing bugs with software workarounds can present business and PR challenges.

We overcame this challenge on the P6 through several parallel efforts. We created high-performance simulators to enable extensive pre-silicon validation. We created special validation platforms to enable high volume post-silicon testing. We put multiple “defeature” modes into every functional unit on the chip to enable continued validation in the face of a discovered blocking bug. We devised a “microcode update” facility to allow the hardware to be re-configured during debug and post-production, and worked with OS and BIOS vendors to enable these updates to be rolled out to end users. In the end, while there were scares and problems to be worked around, we avoided any major recalls with the P6 and its follow-on products.

Researchers at Intel recently announced plans for a trillion-transistor processor by 2030. What key developments have made this possible? What is an example of another important trend you see in the development of microprocessors in the near future?

The key developments for the trillion-transistor processor are the RibbonFET gate-all-around (GAA) transistor with its improved geometry, EUV (extreme ultraviolet) steppers with high numerical aperture for smaller feature sizes, PowerVia technology for backside power delivery, 3D packaging of multiple chiplets, and the EMIB die-to-die interconnect. Possible additional ingredients include ferroelectric RAM and gallium-nitride-on-silicon technology.

I see a trend towards significant changes in the way all these transistors are utilized. Using traditional microarchitecture, we may find we can’t use all these transistors because of power delivery and cooling challenges. In a future era of “Big Data” and AI-focused processing, I see multi-tiered low-power or non-volatile memory, combined with high-density interconnect, as enabling a shift to “bring computing to the data” rather than the more traditional “bring data to the computing.” Another possible option is dynamic reconfiguration of the computing hardware to suit the problem being solved.

Much has been made lately about the fact that microchip production has moved from the United States to Taiwan. How could the US regain its role as a leader in microchip manufacturing?

Leadership in microchip manufacturing today, especially on the high-performance side, requires extraordinary capital investment and a good business case that the products produced can ultimately repay that investment. The extreme cost of EUV equipment, combined with the generally finicky nature of sub-10nm process technology, has driven the cost of a high-volume “megafab” semiconductor manufacturing plant to $20 billion.

Intel historically could support high capital investment because its products combined excellent microarchitecture, circuit design, and process technology, and could take advantage of the volume and economics of the x86 compatible marketplace. With the PC no longer as dominant a player, and with current application software more portable and no longer as dependent on binary compatibility, this traditional business, by itself, is less supportive of the extreme investments required for state-of-the-art semiconductor manufacturing plants. There are still benefits to be had from vertical integration of both development and manufacture, but now the emphasis is on innovation in packaging and system level integration rather than on binary compatibility.

Taiwan Semiconductor Manufacturing Company (TSMC) has succeeded lately in semiconductor manufacturing because it has excellent IP libraries and a well-developed foundry interface, and it has made leading-edge process technology available to a full range of fabless players.

Companies in the USA (including Intel) can succeed in the foundry business as well, but I think it will take a consortium or government investment in IP libraries and tool suites together with subsidies or tax incentives to build those expensive leading-edge manufacturing plants.

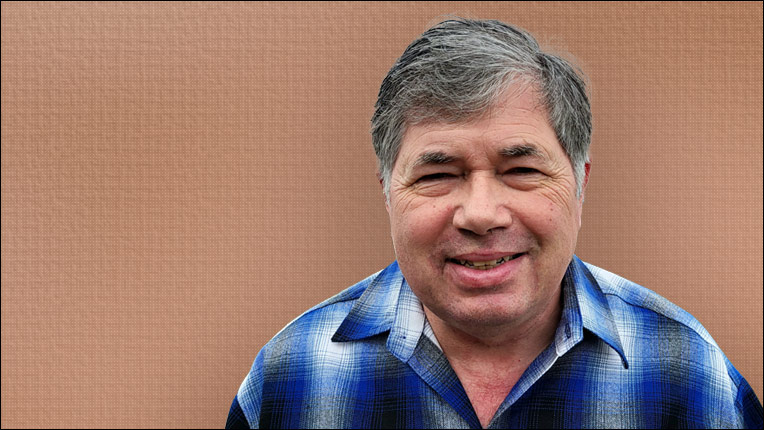

David B. Papworth was employed at Intel Corporation from 1990 to 2020, having served in positions including Principal Processor Architect and Intel Fellow. He has broad experience in CPU microarchitecture and the software/hardware interface, and he is listed as a co-inventor on more than 50 issued patents for his work.

Papworth is the recipient of the 2022 ACM Charles P. “Chuck” Thacker Breakthrough in Computing Award for fundamental groundbreaking contributions to Intel’s P6 out-of-order engine and Very Long Instruction Word (VLIW) processors. Introduced in 1995, the P6 microprocessor (marketed as the Pentium Pro) fit 5.5 million transistors on a single chip and was significantly faster than its predecessors. The architectural paradigm Papworth and his team developed for the P6 microchip is still in use today. The ACM Breakthrough Award celebrates leapfrog advances in computing.